Curiosity Gets An AI Upgrade

11:57 minutes

Among the many hurdles NASA scientists face when conducting research with Mars rover Curiosity, one stands out: time. It takes 24 minutes for scientists to send instructions to Curiosity, a delay that puts constraints on the pace of scientific research. But since May 2016, NASA has been testing out an autonomous system, named AEGIS (Autonomous Exploration for Gathering Increased Science), on the rover. It saves NASA time by letting Curiosity decide which rocks to sample and what data to send back to Earth. Raymond Francis, an engineer at NASA’s Jet Propulsion Laboratory, joins Ira to discuss the rover’s autonomous upgrade.

Raymond Francis is an engineer at NASA’s Jet Propulsion Laboratory in Pasadena, California.

IRA FLATOW: This is Science Friday. I’m Ira Flatow. A bit later, a new approach to vaccines and a menu of ways we can enjoy our food better, using just sounds, textures, and different kinds of utensils.

But first, until recently the typical day for Mars Rover Curiosity went something like this– power up, travel to a new location, sample the chemical makeup of the surrounding terrain. But before it could call on its laser to blast a piece of rock to test it, it had to wait for nearly 48 minutes, which is the time it takes for NASA to receive a signal from the Rover and then send back its instructions. Pretty tedious.

But since May 2016, NASA has been testing an autonomous system on Curiosity, letting the Rover decide which rocks to sample and what data to send back to Earth, which raises the question, if you can make Curiosity even smarter and able to make its own decision, does it need NASA’s hand-holding anymore?

Well, that’s a question we’re going to ask my next guest, Raymond Francis, engineer at NASA JPL. And he led the team that deployed AEGIS. That’s the name of the new AI system to Mars. Welcome to Science Friday.

RAYMOND FRANCIS: Hello.

IRA FLATOW: Hey there. So tell us, what does this new AI software allow Curiosity to do?

RAYMOND FRANCIS: So AEGIS allows two different things– autonomous refinement of pointing errors, when people on the ground are trying to point at a very fine target, and sometimes there are challenges in hitting it accurately with ChemCam’s remote laser spectrometer, and also autonomous target selection, where the Rover chooses for itself, without ground in the loop at all, rocks to measure with ChemCam.

IRA FLATOW: Can it make its own decision which rock to zap with its laser before asking back at HQ?

RAYMOND FRANCIS: That’s exactly what it does, yeah. And so, given certain parameters from the science team and authority to go do so, the Rover can drive into a new place, pick the rock that best matches what the science team asked for, and immediately zap it with the LIBS system.

IRA FLATOW: So that must save an awful amount of time because I would imagine most of the time it’s just sitting there waiting for those instructions.

RAYMOND FRANCIS: Yeah, and in fact, to get around the constant waiting we actually typically upload a whole day’s worth of activities at once. But that still means that after it drives into a new terrain in the afternoon, there’s still plenty of daylight and time for science, but Earth hasn’t seen it yet. And that’s when AEGIS really shines.

IRA FLATOW: So is it in operation now? Is that what’s happening now? Is it permanent?

RAYMOND FRANCIS: Yeah. We uploaded it at the end of 2015, installed it, checked it out over the following winter, and then in May of 2016 we gave this software system as a tool to the mission science team to use as they saw fit. And they’ve been using it regularly for over a year now.

IRA FLATOW: So how does it– give me a little bit of inside baseball on the AI. How does it work? How does it know what is an interesting rock, for example?

RAYMOND FRANCIS: So it starts with a computer vision system. It takes a photograph, usually with the stereo navigation cameras aboard the Rover. And then it can process the image and look for interesting geological features.

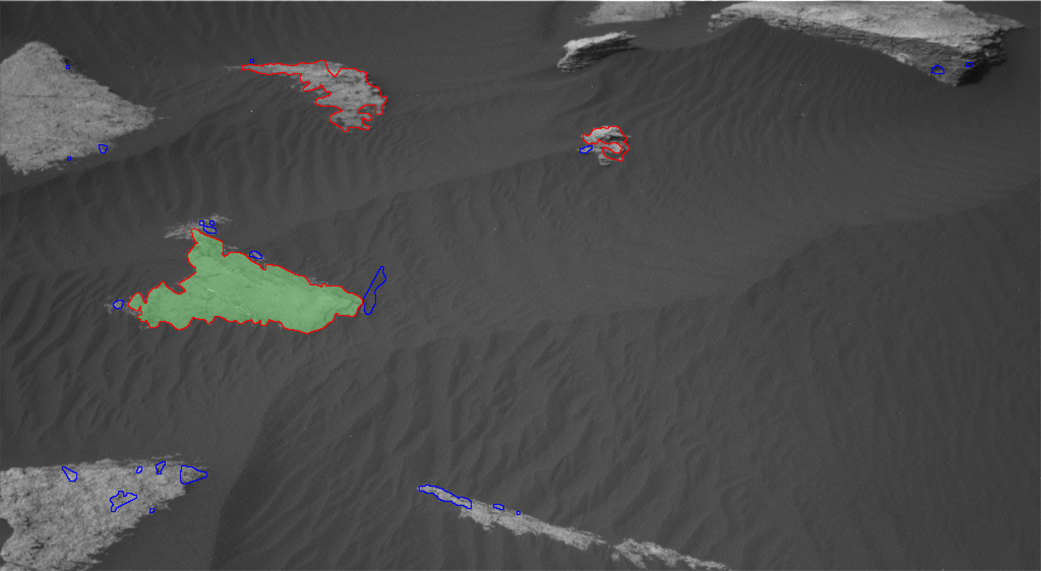

The way it does that is it starts with an edge finder that looks for sharp changes in contrast. And then it tries to find groups of those edges that it can enclose into a loop. And when you have a closable contour like that, or a nearly closable contour, you’ve probably found a distinct object. And in a scene on Mars, that’s probably a rock.

[LAUGHTER]

Yeah. There’s not much else.
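[Editor's illustration] The edge-and-loop strategy Francis describes can be sketched in a few lines. This is not the AEGIS flight code: the function names `edge_map` and `enclosed_regions`, the threshold value, and the toy 7x7 image are all assumptions made for illustration. The sketch marks pixels where brightness changes sharply, then treats any patch of non-edge pixels that cannot be reached from the image border as the inside of a closed contour.

```python
from collections import deque

def edge_map(img, threshold):
    """Mark pixels whose brightness differs sharply from the right or lower neighbour."""
    h, w = len(img), len(img[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = abs(img[y][x] - img[y][x + 1]) if x + 1 < w else 0
            dy = abs(img[y][x] - img[y + 1][x]) if y + 1 < h else 0
            edges[y][x] = max(dx, dy) >= threshold
    return edges

def enclosed_regions(edges):
    """Return groups of non-edge pixels that are fully surrounded by edge pixels."""
    h, w = len(edges), len(edges[0])
    seen = [[False] * w for _ in range(h)]

    def flood(start):
        # Breadth-first fill over 4-connected non-edge pixels.
        region, queue = [], deque([start])
        seen[start[0]][start[1]] = True
        while queue:
            y, x = queue.popleft()
            region.append((y, x))
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and not edges[ny][nx] and not seen[ny][nx]:
                    seen[ny][nx] = True
                    queue.append((ny, nx))
        return region

    # Anything reachable from the image border is background, not an enclosed object.
    for y in range(h):
        for x in range(w):
            on_border = y in (0, h - 1) or x in (0, w - 1)
            if on_border and not edges[y][x] and not seen[y][x]:
                flood((y, x))

    # Whatever non-edge pixels remain must sit inside a closed loop of edges.
    regions = []
    for y in range(h):
        for x in range(w):
            if not edges[y][x] and not seen[y][x]:
                regions.append(flood((y, x)))
    return regions

# Toy scene (hypothetical): a bright 3x3 "rock" on a dark 7x7 background.
image = [[10] * 7 for _ in range(7)]
for y in range(2, 5):
    for x in range(2, 5):
        image[y][x] = 200

regions = enclosed_regions(edge_map(image, threshold=50))
print(len(regions), len(regions[0]))  # prints "1 4"
```

The recovered region is smaller than the rock itself because some rim pixels are consumed as edges. A contour with a gap would leak into the background fill and yield no region, so the "nearly closable" case Francis mentions would need extra handling that this sketch does not attempt.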

IRA FLATOW: Unless it’s looking at itself, right?

RAYMOND FRANCIS: That’s right. And in fact, it’s very important that it not pick targets on the Rover– that it pick the rocks, both for science and for safety.

IRA FLATOW: I’ll bet that laser is pretty strong.

RAYMOND FRANCIS: It is. Yeah. We can put two gigawatts per square centimeter into the target, and that’s enough to vaporize rock. We certainly wouldn’t want to do that on the Rover itself.

IRA FLATOW: Yeah. Keep going. Tell us what it does.

RAYMOND FRANCIS: Yeah. So of course, it finds those closable contours and then the next step is to look at the groups of pixels that are inside each contour– what we would call a target– and look at the properties of those. Are they bright or dark? Are they smooth and consistent? Or is it a mottled, inconsistent texture?

Are they large? Are they close? Are they far? What’s the shape? All of those features are things that you can derive relatively simply from the image once you’ve identified the targets.

And then we encode geological interestingness as an algebraic linear combination of those pixel properties. So some combination of bright, and dark, and small, and far away– these things can be used to recognize different types of geological materials.
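[Editor's illustration] A linear combination of target properties, as described above, can be sketched as a weighted sum. The property names, the 0-to-1 scaling, the weights, and the three candidate targets below are all hypothetical; the interview does not give the actual AEGIS features or settings.

```python
from dataclasses import dataclass

@dataclass
class Target:
    name: str
    brightness: float  # mean pixel intensity, scaled 0..1 (assumed scaling)
    texture: float     # 0 = smooth and consistent, 1 = mottled
    size: float        # relative area in the image, 0..1
    distance: float    # relative range from the rover, 0..1

def interest_score(target, weights):
    """Weighted sum of the target's properties: one number per candidate."""
    return sum(w * getattr(target, prop) for prop, w in weights.items())

def pick_target(targets, weights):
    """Choose the candidate with the highest score."""
    return max(targets, key=lambda t: interest_score(t, weights))

# Hypothetical profile: prefer bright, smooth, large, nearby material.
weights = {"brightness": 1.0, "texture": -0.5, "size": 0.8, "distance": -0.3}

candidates = [
    Target("outcrop", brightness=0.8, texture=0.2, size=0.9, distance=0.3),
    Target("float_rock", brightness=0.4, texture=0.6, size=0.3, distance=0.2),
    Target("soil_patch", brightness=0.5, texture=0.1, size=0.5, distance=0.5),
]

best = pick_target(candidates, weights)
print(best.name)  # prints "outcrop"
```

Changing the signs and sizes of the weights is what would let a science team ask for a different kind of material without changing the code.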

IRA FLATOW: So how good– how smart has it been at picking out the right thing to zap?

RAYMOND FRANCIS: It’s actually been performing very well, I think even better than we had hoped. One of the things the science team asked us to focus on was to look preferentially for pieces of rock outcrop, like bedrock that’s still in the place where it formed, after the drives.

And in the area we’re in, we managed to find a set of computer vision settings which allow it to do that with well over 90% reliability. Most of the time it picks the most desired target material and when it doesn’t, it picks something that’s almost as good.

IRA FLATOW: Now, Curiosity must be pretty old in terms of when it was first on the drawing board, right?

RAYMOND FRANCIS: Yeah. I mean, the Rover launched in– yeah, Rover launched in 2011 and it’s been in design for years before that.

IRA FLATOW: So I would imagine the hardware on there is not as robust as our iPhones, or something like that.

RAYMOND FRANCIS: Yeah. So it’s robust in the sense that it’s hardened against radiation, but it’s not powerful like the fancy processors you have in your desktops or your cell phones these days.

The flight computer that we use runs at 133 megahertz, and we have access to 16 megabytes of RAM. So this is your best desktop computer of 1993 or so, or your cell phone of 2002.

IRA FLATOW: Wow. That’s amazing. So I would imagine, then, you cannot upgrade the intelligence of the Curiosity much more because you don’t have the on-board hardware and software to make it really smart.

RAYMOND FRANCIS: Yeah. I mean, it’s kind of surprising that we can do what we do already. And in fact, we had to make some compromises in terms of performance at finding geological features versus performance in terms of speed.

One of the things, for example, is the stereo computation because we have to find the distance to all these targets to correctly focus the laser. And that stereo computation is very expensive, and we learned to slim it down to run as fast as possible.
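[Editor's illustration] For readers wondering why stereo ranging is costly, here is a minimal sketch under the standard rectified pinhole-stereo model, where range equals focal length times camera baseline divided by disparity. It is not the rover's algorithm, and the focal length, baseline, and pixel rows are made-up numbers. The expensive part is the search in `best_disparity`: every pixel needs a window comparison at every candidate shift.

```python
def best_disparity(left_row, right_row, x, window, max_disp):
    """Find the shift d that best matches a window around left_row[x] to right_row[x - d].

    Uses the sum of absolute differences as the matching cost.
    """
    best, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - window < 0:
            break  # window would fall off the left end of the right image
        cost = sum(
            abs(left_row[x + i] - right_row[x - d + i])
            for i in range(-window, window + 1)
        )
        if cost < best_cost:
            best, best_cost = d, cost
    return best

def stereo_range_m(focal_px, baseline_m, disparity_px):
    """Range from disparity: Z = f * B / d (f in pixels, B in metres, d in pixels)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

# Hypothetical rows from a left/right image pair; the feature is shifted by 2 pixels.
left_row = [0, 0, 0, 0, 0, 9, 5, 9, 0, 0]
right_row = [0, 0, 0, 9, 5, 9, 0, 0, 0, 0]
d = best_disparity(left_row, right_row, x=6, window=1, max_disp=4)
print(d)  # prints 2

# Hypothetical camera: 1000-pixel focal length, 0.4 m between the two cameras.
print(stereo_range_m(1000.0, 0.4, 100.0))
```

Because range is inversely proportional to disparity, halving the disparity doubles the computed distance, which is why distant targets are harder to range precisely.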

IRA FLATOW: And I find it amazing that you can do all of this with the rocks, and it doesn’t even have color eyes.

RAYMOND FRANCIS: That’s right. Yeah. The nav cams are black and white grayscale cameras.

IRA FLATOW: Do you have plans, then– I guess there’s not enough room on this to make it fully autonomous to drive on its own.

RAYMOND FRANCIS: Do you mean, like autonomous navigation? Because that is actually something that we do have.

IRA FLATOW: Yeah?

RAYMOND FRANCIS: Yeah. The Rover is able to autonomously navigate over short distances.

IRA FLATOW: And how do you make it better? I know everybody’s thinking about the Mars 2020 mission. And that stuff must be on the drawing board. Can you give us some ideas of the parameters of that device?

RAYMOND FRANCIS: Yeah. So in the next mission, we will have a marginally better computer.

IRA FLATOW: Just marginally?

RAYMOND FRANCIS: It’s not a leap ahead from the same thing. It’s actually hard with space computers because everything depends on the computer, and that computer has to work in challenging environments, and no one wants to be the first to have their multibillion dollar mission rely on a new, unproven computer.

So we have something that’s just a little bit better, but it isn’t a generation newer. But we do have new algorithms in development, and at least the current version will certainly be able to run in 2020. And we’ll have color nav cams.

IRA FLATOW: So your grand visions for improving AI performance would be just refining what you’re doing now, but doing it better?

RAYMOND FRANCIS: So I think, yeah. We have some new ideas about how to find targets. I described that edge and loop strategy.

We have some new ideas for what we might do there. And with color, we will have more information to work with. But also, I think there’s other avenues.

For example, allowing the Rover to reschedule activities to better fit in these kinds of autonomous science targets and better manage its resources on board to help the team on Earth simplify their planning. I think these are where we’re going next, I think.

IRA FLATOW: The crucial question for you– do we have a name yet for this new Rover?

RAYMOND FRANCIS: The new Rover? No. But stay tuned because I think NASA will be looking for ways to choose that name in the not too distant future.

IRA FLATOW: Now, we can help you out with that here on Science Friday.

RAYMOND FRANCIS: Oh, you have some ideas?

IRA FLATOW: We’ll get you a name. You know, we have two million listeners. We can come up with a few names, I’m sure.

RAYMOND FRANCIS: And you know what? Maybe that’s an approach.

IRA FLATOW: And so you’re very happy, then? How about the health of it? Is Curiosity in good shape? I know the other Rovers are sort of– they’re old and they’re showing their age.

RAYMOND FRANCIS: They are. And of course, it’s a difficult environment to be in. You would show your age too if you’d been wandering around in that terrain for five years. MSL– the Curiosity Rover– is by and large working really well, and the instruments are pretty healthy.

There’s been some attention to the wear on the wheels, but they’re actually holding up pretty well as we’ve learned to better use them in our terrain. So yeah. I think we’re pretty confident that the Rover will keep going for some time yet.

IRA FLATOW: And give us an exact position where it is on Mars now.

RAYMOND FRANCIS: So we’re inside Gale Crater, the same place we landed. That crater is 150ish kilometers across, so we’re never going to leave. We’re going to do all of our mission inside that crater.

We drove across the more or less flat plain of the crater floor towards the mound in the middle, and that mound is like 4.5 kilometers high– it’s quite a mountain, really. And we’re now exploring the lowest layers of that mountain, looking into these sedimentary rocks that describe the history of this environment.

And we’re actually coming up on an area that we had identified from orbit as containing interesting minerals that probably formed in a wet environment. And so we’re just approaching this place. And expect more interesting results as we see this– what may be a transition in the environmental history of rock formation in this environment.

IRA FLATOW: You mean because of the wetness of the area?

RAYMOND FRANCIS: Yeah. We see from orbit this ridge of material that appears to contain minerals like hematite. And so this is different from the terrain that we’ve been driving on so far. So as we approach it, we’ll get a real view for the first time of what it’s really made of.

IRA FLATOW: All right. We’ll be following along with you, Dr. Francis. Thank you for taking time to be with us, and good luck.

RAYMOND FRANCIS: Yeah. Thank you.

IRA FLATOW: You’re welcome. We’ll be helpful with that name thing if you ever need us. So let us know. Raymond Francis is an engineer at NASA’s Jet Propulsion Laboratory. He led the team that deployed AEGIS, the new AI system, to Mars.

Copyright © 2017 Science Friday Initiative. All rights reserved. Science Friday transcripts are produced on a tight deadline by 3Play Media. Fidelity to the original aired/published audio or video file might vary, and text might be updated or amended in the future. For the authoritative record of ScienceFriday’s programming, please visit the original aired/published recording. For terms of use and more information, visit our policies pages at http://www.sciencefriday.com/about/policies/

Katie Feather is a former SciFri producer and the proud mother of two cats, Charleigh and Sadie.